The Role of Chaos Engineering in Developing Resilient Systems

The rapid development of distributed software systems is changing the rules of the game in software engineering. Today, organizations are adopting innovative development practices to ensure the security, resiliency, and scalability of their systems.

However, despite all the advantages of modern development methods, the question arises: how confident are we in complex software systems?

Even when every individual service in a distributed system functions as expected, interactions between those services can produce unpredictable results. Combined with rare but destructive events, these interactions make systems prone to failure.

Chaos engineering is an effective method for verifying that a software product behaves predictably, even when something goes wrong.

What is chaos engineering, and how does it contribute to creating resilient systems? This article answers both questions.

What is chaos engineering?

Chaos engineering is the practice of running deliberate, planned experiments that show how a system behaves in unexpected situations.

Chaos engineers create stressful conditions for the system and try to find possible failures before something terrible happens.

Chaos engineering embraces the philosophy of "breaking things on purpose" instead of hoping a system will remain robust under any conditions. It uncovers vulnerabilities before they become catastrophic failures and helps engineers observe how a system responds under stress.

The main purpose of chaos engineering is to identify weak points that may otherwise remain hidden until a real-world incident occurs. It enables organizations to understand how resilient their systems are in adverse conditions and develop strategies to swiftly mitigate and recover from failures.

When did chaos engineering appear?

Netflix pioneered chaos engineering in the early 2010s. Its first tool, Chaos Monkey, which Netflix open-sourced in 2012, deliberately introduces failures into production infrastructure.

Netflix created Chaos Monkey when migrating from physical infrastructure to the AWS cloud.

They wanted to ensure that the loss of an Amazon instance would not impact streaming stability. Chaos Monkey randomly terminated virtual machine instances and containers in production. This allowed engineers to immediately see whether their services were resilient enough to cope with unplanned failures.

The fundamentals of chaos experiments

Chaos experiments are carefully designed tests conducted to discover system weaknesses and vulnerabilities. These experiments serve a crucial purpose: to simulate real-world issues in a controlled environment and observe how the system responds.

Chaos experiments involve a few core components:

Hypothesis - an initial assumption about how the system will behave under certain conditions. For example, you might hypothesize that increased network latency will result in slower response times.

Experiment design - specific parameters of the experiment, including what disruptions will be introduced and how they will be measured.

Execution - chaos experiments are executed in a controlled manner. The teams use chaos engineering tools to simulate disruptions like network failures, server crashes, or increased load.

Observation and analysis - logs and metrics that help understand the impact of the disruption on the system.
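To make these four components concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the API of any chaos engineering tool: the `ChaosExperiment` class, the `run` function, and the toy `service` dictionary are all hypothetical names, and the latency figures are made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Metrics = Dict[str, float]


@dataclass
class ChaosExperiment:
    """Bundles the components of a chaos experiment (illustrative names)."""
    hypothesis: str                            # what we expect to happen
    inject: Callable[[], None]                 # experiment design: the disruption
    rollback: Callable[[], None]               # how to undo the disruption
    measure: Callable[[], Metrics]             # observation: collect metrics
    holds: Callable[[Metrics, Metrics], bool]  # analysis: did the hypothesis hold?


def run(experiment: ChaosExperiment) -> dict:
    baseline = experiment.measure()      # steady state before the disruption
    experiment.inject()                  # execution
    try:
        observed = experiment.measure()  # observation under disruption
    finally:
        experiment.rollback()            # always restore the system
    return {
        "hypothesis": experiment.hypothesis,
        "baseline": baseline,
        "observed": observed,
        "hypothesis_held": experiment.holds(baseline, observed),
    }


# Toy stand-in for a real service: a dict whose latency we can change.
service = {"extra_latency_ms": 0.0}

experiment = ChaosExperiment(
    hypothesis="Adding 200 ms of network latency slows responses",
    inject=lambda: service.update(extra_latency_ms=200.0),
    rollback=lambda: service.update(extra_latency_ms=0.0),
    measure=lambda: {"response_ms": 50.0 + service["extra_latency_ms"]},
    holds=lambda base, obs: obs["response_ms"] > base["response_ms"],
)

report = run(experiment)
print(report)
```

In a real setup, `inject` and `rollback` would call a chaos engineering tool, and `measure` would query your monitoring system. The `try`/`finally` block reflects one design choice worth keeping: the disruption is rolled back even if the measurement itself fails.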

Types of chaos experiments

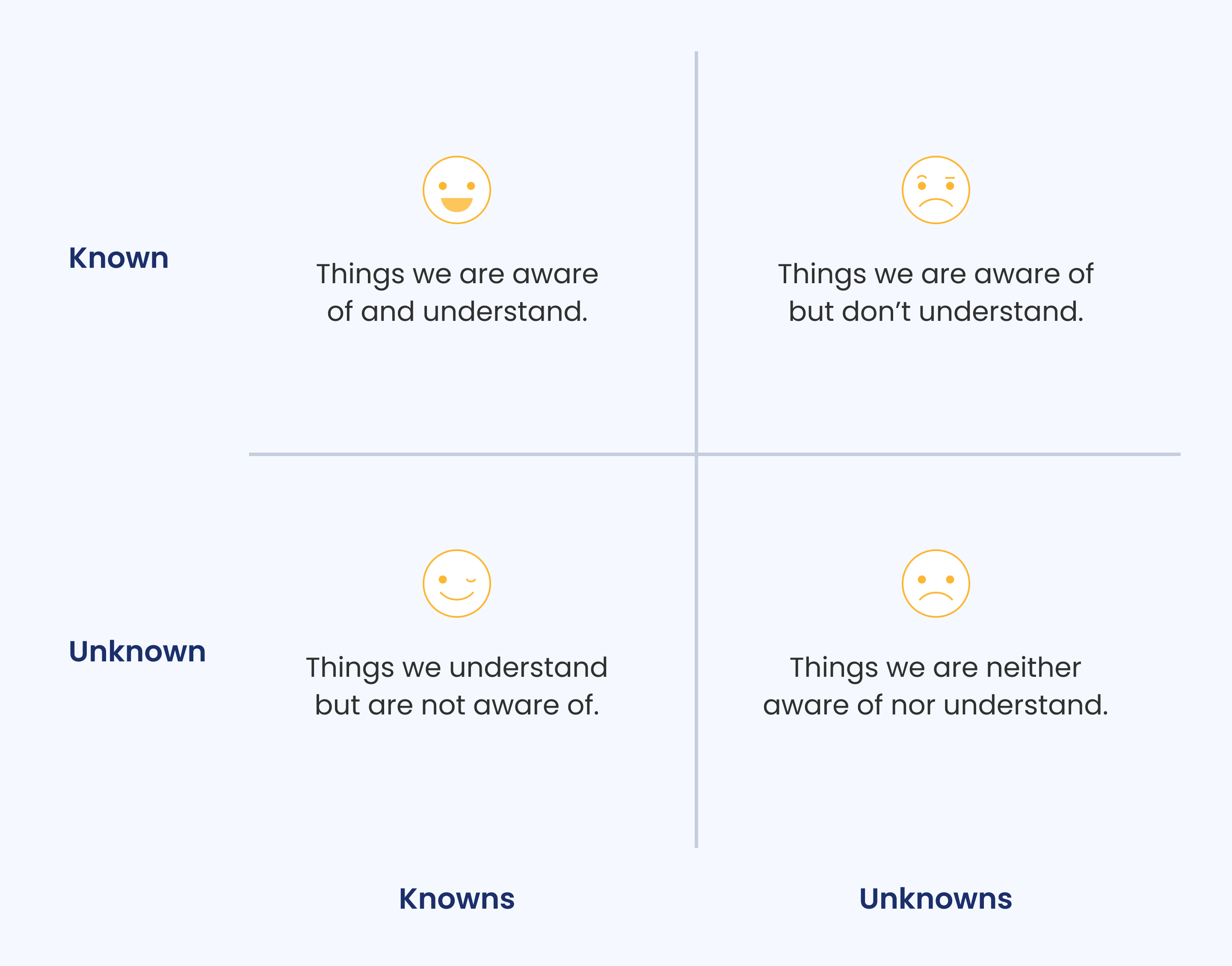

There are four types of chaos experiments used to test system resilience:

Known Knowns - things you know about and understand

Known Unknowns - things you know about but don't understand

Unknown Knowns - things you understand but do not know about

Unknown Unknowns - things you neither know about nor understand

Examples of chaos experiments

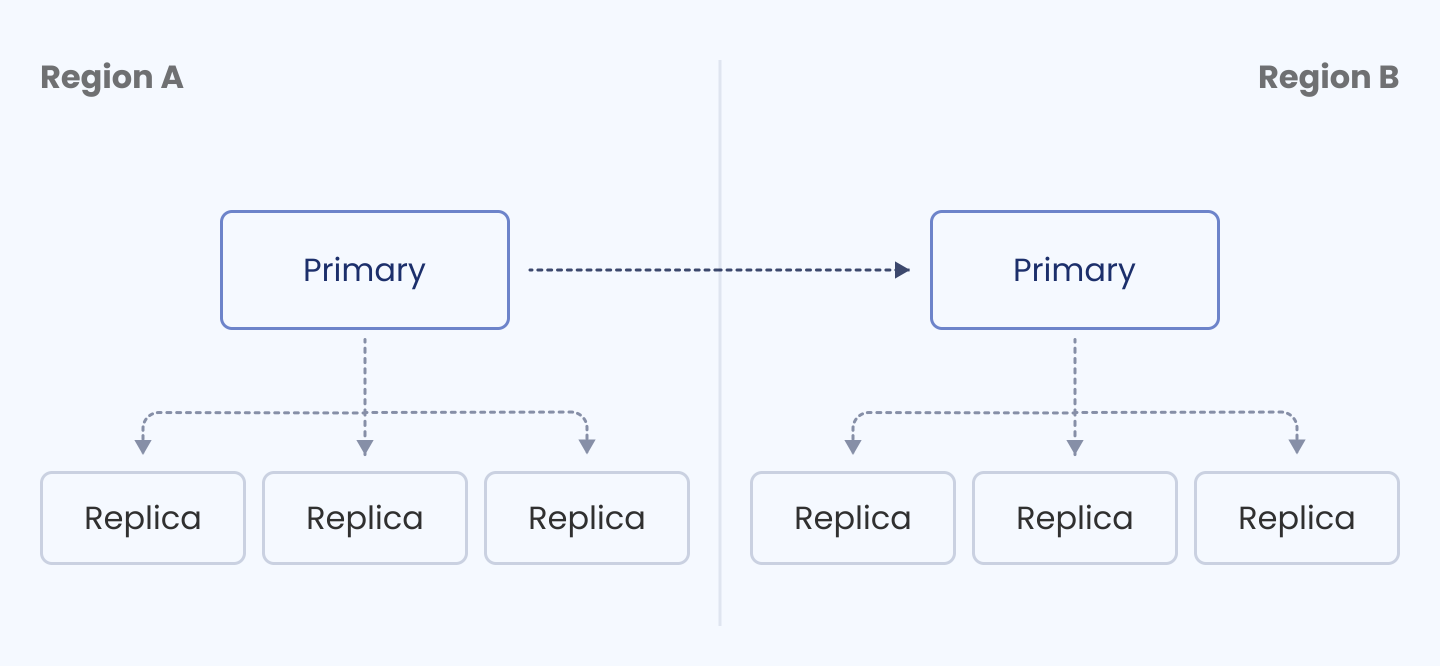

Scenario: We have a database with two clusters in two geographic regions, each consisting of a primary server and three replicas. We run chaos experiments to test incidents related to database replica failures.

Known Knowns

Known information

When a replica shuts down, it is removed from the cluster. A new replica is then cloned from the primary and added back to the cluster.

Experiment

Turn off a single replica in the Region A cluster.

Expected outcome

Verify that a new replica is cloned and added to the cluster.

Known Unknowns

Known information

Logs that show if the clone creation is successful or not.

Unknown information

The average time from a replica failure to a clone being added to the cluster.

Experiment

Turn off a single replica in the Region A cluster.

Expected outcome

Record the duration it takes to detect the shutdown, remove the replica, initiate the clone, complete the clone process, and reintegrate the clone into the cluster.
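One way to record these durations is to timestamp each recovery stage as it completes. The sketch below is a simplified illustration: the `RecoveryTimeline` class and the stage names are hypothetical, and the timestamps come from a fake clock with made-up values so the example is reproducible. In a real experiment, you would call `mark` when the corresponding event appears in your logs.

```python
import time
from typing import Callable, Dict

# Stage names are illustrative; they mirror the steps listed above.
STAGES = [
    "detect_shutdown",
    "remove_replica",
    "initiate_clone",
    "complete_clone",
    "reintegrate_clone",
]


class RecoveryTimeline:
    """Records when each recovery stage completes, relative to the failure."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._start = clock()  # the moment the replica was turned off
        self._marks: Dict[str, float] = {}

    def mark(self, stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        self._marks[stage] = self._clock() - self._start

    def durations(self) -> Dict[str, float]:
        """Seconds spent in each recorded stage, in stage order."""
        result: Dict[str, float] = {}
        previous = 0.0
        for stage in STAGES:
            if stage in self._marks:
                result[stage] = self._marks[stage] - previous
                previous = self._marks[stage]
        return result

    def total(self) -> float:
        """Seconds from the failure to the last recorded stage."""
        return max(self._marks.values(), default=0.0)


# Fake clock with made-up readings (in seconds) instead of real time.
fake_times = iter([0.0, 4.0, 5.0, 7.0, 67.0, 70.0])
timeline = RecoveryTimeline(clock=lambda: next(fake_times))
for stage in STAGES:
    timeline.mark(stage)

print(timeline.durations())
print(timeline.total())
```

Repeating the experiment several times and averaging the totals gives you the missing number: the average time from a replica failure to a clone rejoining the cluster.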

Unknown Knowns

Unknown information

The average time to create two replica clones under peak load.

Known information

The third replica will still handle the transactions if the other two are shut down.

Experiment

Before conducting the experiment, add a fourth replica to the Region A cluster. Regularly turn off two replicas of the Region A cluster during peak periods (say, on Friday evenings or holidays) for several months. Observe how long it takes to clone replicas under peak load.

Expected outcome

Identify issues related to cloning replicas during peak periods. For example, you may find that the primary server cannot handle traffic processing and replica cloning simultaneously. In this case, you should look for ways to optimize the setup.

Unknown Unknowns

Unknown information

How the Region B cluster will handle the load if the Region A cluster is disabled.

Experiment

Shut down the Region A cluster, including the primary server and replicas.

Expected outcome

See how the Region B cluster handles the load. Identify an action plan in case such a shutdown happens in the real world.

Understanding blast radius and its impact on experiment design

When running chaos experiments, it's crucial to consider the blast radius. The blast radius is the potential scope of a disruption introduced during an experiment. In other words, it's the extent to which chaos can affect different parts of the system.

Understanding and managing the blast radius is paramount because chaos engineering aims to uncover vulnerabilities without causing widespread harm to the system. Experimenters must balance creating realistic scenarios with minimizing the risk of harming critical systems.

To manage the blast radius effectively, chaos engineers often start with smaller, controlled experiments. They increase the experiment's scope as they gain more confidence in the system's resilience.

Remember our example with database replica failures. We started by shutting down a single replica and eventually shut down the entire cluster. We gradually increased the complexity of the experiment to understand how the system reacts to certain actions and to prevent irreversible consequences.

This iterative approach allows for uncovering vulnerabilities while keeping potential harm in check.
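The gradual widening of scope can be sketched as a loop that runs stages from the smallest blast radius to the largest and stops as soon as the system shows too much harm. This is a simplified illustration: the `escalate` function is hypothetical, and the error rates are made-up placeholders rather than measurements.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], float]]


def escalate(stages: List[Stage], max_error_rate: float) -> List[str]:
    """Run stages in order of increasing blast radius.

    Each stage is (name, run), where run() injects the fault and returns
    the error rate observed while it was active. The loop stops at the
    first stage that exceeds max_error_rate, so larger stages never run.
    """
    completed: List[str] = []
    for name, run in stages:
        error_rate = run()
        if error_rate > max_error_rate:
            print(f"abort at '{name}': error rate {error_rate:.1%}")
            break
        completed.append(name)
    return completed


# Hypothetical stages; each lambda stands in for a real fault injection
# and returns a made-up error rate.
stages = [
    ("one replica in Region A", lambda: 0.001),
    ("two replicas in Region A", lambda: 0.004),
    ("entire Region A cluster", lambda: 0.08),
]

completed = escalate(stages, max_error_rate=0.01)
print(completed)
```

With these placeholder numbers, the first two stages pass and the full-cluster stage triggers the abort. Once that weakness is fixed, the next run can expand the scope again.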

Getting started with chaos engineering

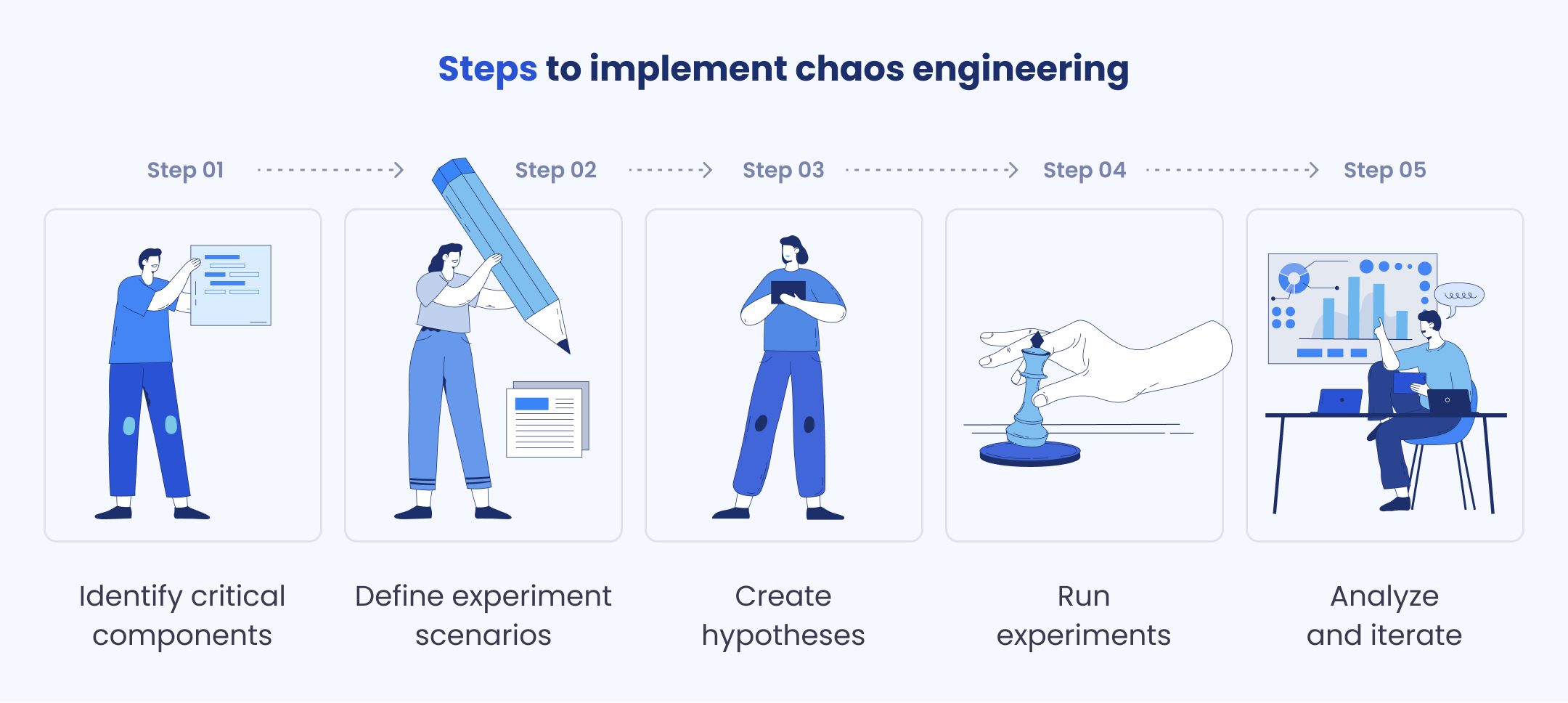

So, you're ready to try chaos engineering and start your journey towards building more resilient systems. Here's a step-by-step guide to help you get started:

Identify critical components

Begin by identifying the core components and services that, if disrupted, would have the most significant impact on your users and your business. These may include databases, load balancers, or external APIs. By prioritizing software components, you can maximize the effectiveness of chaos experiments.

Define experiment scenarios

Chaos engineering experiments should be targeted and well-defined. For better effect, create a step-by-step plan and identify what actions will be taken, what tools will be used, what system components will be targeted, and who will be responsible for the specific actions.

Create hypotheses

Before executing an experiment, formulate hypotheses about how you expect the system to respond to the disruption you're introducing. For instance, you might hypothesize that increased network latency will lead to degraded system performance. The hypotheses help you measure the actual impact against your expectations.

Run experiments

With your critical components identified, scenarios defined, and hypotheses in place, it's time to run your chaos experiments. This involves introducing controlled disruptions to the system, such as injecting network latency, killing server instances, or overloading a service with requests. It's essential to conduct experiments in a controlled and measured manner, using the chaos engineering tools that best suit your infrastructure.
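At the application level, a controlled disruption can be as simple as a wrapper that delays or fails calls to a dependency. The sketch below assumes a hypothetical `with_faults` helper and a toy `get_profile` function. It is not the API of any chaos engineering tool, and dedicated tools usually inject faults at the network or infrastructure level instead.

```python
import random
import time
from typing import Any, Callable, Optional


def with_faults(
    call: Callable[..., Any],
    *,
    latency_s: float = 0.0,
    failure_rate: float = 0.0,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[..., Any]:
    """Wrap `call` so that it is delayed and randomly fails.

    latency_s: delay added before every call, in seconds.
    failure_rate: probability (0.0 to 1.0) that a call raises an error.
    rng and sleep can be replaced in tests to keep runs fast and repeatable.
    """
    if rng is None:
        rng = random.Random()

    def wrapper(*args: Any, **kwargs: Any) -> Any:
        if latency_s > 0:
            sleep(latency_s)                 # injected latency
        if rng.random() < failure_rate:      # injected failure
            raise ConnectionError("injected fault")
        return call(*args, **kwargs)

    return wrapper


# Toy dependency standing in for a real downstream service.
def get_profile(user_id: int) -> dict:
    return {"id": user_id}


# Record the requested delays instead of actually sleeping.
slept = []
slow_get_profile = with_faults(get_profile, latency_s=0.2, sleep=slept.append)
result = slow_get_profile(7)
print(result, slept)
```

Keeping `latency_s` and `failure_rate` as explicit parameters makes the disruption measurable and easy to switch off, which is what "controlled" means in practice.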

Analyze and iterate

After each experiment, gather data on how the system behaved, whether it met or deviated from your hypotheses, and how quickly it recovered. Analyze this data to identify areas for improvement. This could involve making architectural changes, implementing redundancy, or refining error-handling mechanisms.
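Recovery speed is one of the easier things to quantify from the collected data. The sketch below computes how long a metric took to return to normal after the disruption ended. The `recovery_time` function, the threshold, and the sample values are all hypothetical and only serve to illustrate the idea.

```python
from typing import List, Optional, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, metric value)


def recovery_time(
    samples: List[Sample], fault_end: float, threshold: float
) -> Optional[float]:
    """Seconds from fault_end until the metric drops to or below threshold
    and stays there for the rest of the samples.

    Returns None if the metric never settles below the threshold.
    """
    recovered_at: Optional[float] = None
    for timestamp, value in samples:
        if timestamp < fault_end:
            continue
        if value <= threshold:
            if recovered_at is None:
                recovered_at = timestamp
        else:
            recovered_at = None  # relapse: the system had not recovered yet
    if recovered_at is None:
        return None
    return recovered_at - fault_end


# Made-up response times in milliseconds, sampled every 10 seconds.
samples = [
    (0.0, 50.0), (10.0, 260.0), (20.0, 255.0), (30.0, 180.0),
    (40.0, 90.0), (50.0, 120.0), (60.0, 70.0), (70.0, 55.0),
]

# The fault ended at t=20; we treat 100 ms or less as healthy.
print(recovery_time(samples, fault_end=20.0, threshold=100.0))
```

Note that the dip at 40 seconds does not count as recovery because the metric climbs back above the threshold at 50 seconds. Comparing this number against the value stated in your hypothesis shows whether the system recovered as quickly as expected.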

Common misconceptions about chaos engineering

Organizations may neglect chaos engineering because they have some misconceptions about it. Here are the most common of these:

Misconception 1: Chaos engineering is too disruptive

In reality, while chaos engineering does involve some disruption, it's carefully controlled and can be conducted in ways that minimize impact. The goal is to discover vulnerabilities before they lead to uncontrolled, highly disruptive incidents.

Misconception 2: Chaos engineering is time-consuming

In reality, the investment in proactive testing saves significant time in the long run by preventing emergency fixes. The time spent on chaos engineering can lead to more reliable and efficient systems.

Misconception 3: Chaos engineering is only for large organizations

In reality, chaos engineering is not exclusive to large organizations. It can be valuable for businesses of all sizes, because chaos experiments are designed around the specifics of the software and the goals of the project.

Misconception 4: Chaos engineering replaces traditional testing

In reality, chaos engineering is not a replacement for traditional testing but rather a complementary practice. While traditional testing is essential for validating expected system behavior, chaos engineering focuses on uncovering unexpected weaknesses.

Chaos engineering tools and resources

Numerous tools and resources are available to help you implement chaos engineering. While we won't discuss these tools in depth here, it's important to be aware of some key names in the chaos engineering space. They include:

Chaos Monkey. Developed by Netflix, Chaos Monkey is one of the pioneering tools in chaos engineering. Chaos Monkey terminates instances in production to check if the services are resilient to instance failures.

Chaos Toolkit. An open-source tool that allows you to define, run, and report on chaos experiments. Chaos Toolkit supports multiple platforms and extensions and allows running chaos tests in different IT environments.

Gremlin. Gremlin provides a controlled environment where you can run chaos experiments to observe how the system responds under adverse conditions.

Chaos Mesh. An open-source platform designed to simulate various types of faults in Kubernetes environments and orchestrate fault scenarios.

Litmus Chaos. An open-source platform for chaos engineering that allows teams to run controlled tests and identify potential weaknesses and outages.

Conclusion

In today's technological landscape, resilient systems are more critical than ever. Chaos engineering is a proactive approach to making software resistant to unexpected failures. By simulating disruptive events in a controlled environment, it helps prevent major issues in production.

At Erbis, we help organizations identify and mitigate vulnerabilities in software systems by offering comprehensive chaos engineering services.

Our team follows best practices, utilizes industry-standard tools, and conducts controlled chaos experiments, ultimately leading to enhanced user experiences and cost savings.

Need help with executing chaos engineering in your project? Don't hesitate to get in touch.