ETL in action: when data rules the world

In the era of data-driven business, it is vital to get the most out of the information you collect. Companies receive massive amounts of data from different sources, but not all of it is valuable to the organization. A streamlined process for extracting, transforming, and loading (ETL) data helps allocate company resources optimally and reach business goals faster. In this post, we look at how to overcome common ETL challenges and set up an efficient data pipeline.

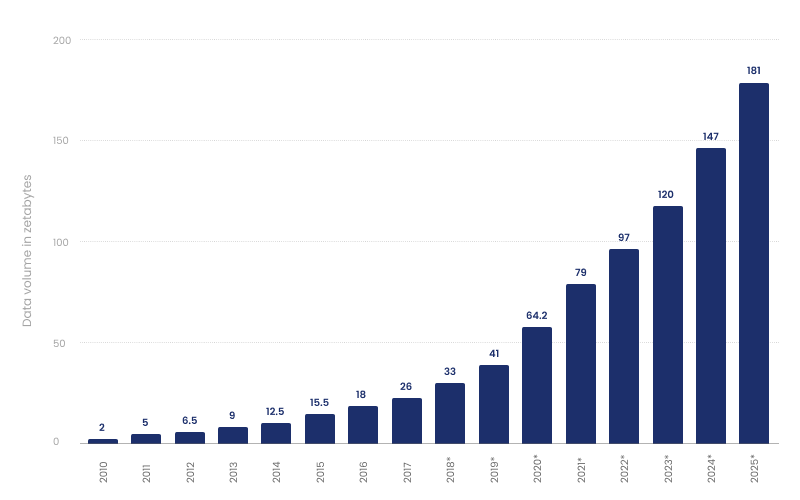

Volume of data/information created, captured, copied, and consumed worldwide from 2010 to 2025

Five ETL challenges to prepare for

The biggest challenge when establishing ETL systems is defining the right goals and choosing appropriate ways to achieve them. Therefore, before you start building the ETL pipeline, it is necessary to answer the following questions:

Where are you going to extract the data from?

What data warehouse tools are you going to use?

Do you have a qualified ETL team?

What benefits do you aim to receive from ETL development?

Why is this project important for your organization?

Once you’ve identified the ultimate goals, you can proceed to more specific tasks and solve problems related to their implementation. Here are the main challenges you are going to face:

Data change

The first stage of ETL development is to extract raw data from various sources such as:

databases

CRM (customer relationship management) systems

marketing tools

analytical systems

mobile devices

data storage platforms

etc.

Data formats may change over time. That is why ETL developers should provide a mechanism for adding new columns, dimensions, and derived fields, so the pipeline can react to such changes promptly without losing necessary information.
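A minimal sketch of such a mechanism, assuming an in-memory SQLite database as a stand-in warehouse: before each load, compare the keys of incoming records with the table’s existing columns and add any missing ones as nullable columns. The `customers` table and the helpers `sync_schema` and `load` are hypothetical; real warehouses have their own `ALTER TABLE` dialects, and many teams manage such changes through migrations instead.

```python
import sqlite3
from typing import Any, Dict, List


def sync_schema(conn: sqlite3.Connection, table: str, records: List[Dict[str, Any]]) -> List[str]:
    """Add a nullable TEXT column for every incoming field the table lacks.

    Assumes table and field names come from trusted configuration, because
    identifiers cannot be passed as SQL parameters.
    """
    # PRAGMA table_info yields (cid, name, type, notnull, default, pk) per column.
    existing = {row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')}
    added: List[str] = []
    for record in records:
        for field in record:
            if field not in existing:
                # Nullable columns keep previously loaded rows valid.
                conn.execute(f'ALTER TABLE "{table}" ADD COLUMN "{field}" TEXT')
                existing.add(field)
                added.append(field)
    return added


def load(conn: sqlite3.Connection, table: str, records: List[Dict[str, Any]]) -> None:
    """Insert each record using only the columns it actually contains."""
    for record in records:
        cols = ", ".join(f'"{c}"' for c in record)
        marks = ", ".join("?" for _ in record)
        conn.execute(f'INSERT INTO "{table}" ({cols}) VALUES ({marks})', list(record.values()))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
    batch = [
        {"id": 1, "email": "a@example.com"},
        {"id": 2, "email": "b@example.com", "country": "US"},  # the source added a field
    ]
    print(sync_schema(conn, "customers", batch))  # ['country']
    load(conn, "customers", batch)
    print(conn.execute("SELECT id, country FROM customers ORDER BY id").fetchall())
```

Rows loaded before the new field appeared simply hold `NULL` in the added column.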

Data diversity

Data from different sources arrives in inconsistent forms and must be unified before it is stored in the warehouse. For example, your CRM system may record a user’s country as USA, while the client application uses US. To group all American users under a single value, you need a cleansing step that maps both USA and US to the same code. Even though most data warehouses can transform data on their own, an extra cleansing step before loading is worthwhile: it produces more consistent structures and more reliable information for decision-makers.
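A cleansing step like this can be sketched as a lookup from every known spelling to one canonical code, with unrecognized values set aside for review rather than silently loaded. The alias table `COUNTRY_ALIASES` and the functions `canonical_country` and `cleanse` are hypothetical illustrations, not part of any particular ETL tool.

```python
from collections import Counter
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical alias table: every known spelling maps to one canonical code.
COUNTRY_ALIASES: Dict[str, str] = {
    "us": "US",
    "usa": "US",
    "u.s.": "US",
    "u.s.a.": "US",
    "united states": "US",
}


def canonical_country(raw: Optional[str]) -> Optional[str]:
    """Return the canonical code for a raw country value, or None if it is unknown."""
    if raw is None:
        return None
    return COUNTRY_ALIASES.get(raw.strip().lower())


def cleanse(records: List[Dict[str, Any]]) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Split records into normalized ones and ones that need manual review."""
    clean: List[Dict[str, Any]] = []
    rejected: List[Dict[str, Any]] = []
    for record in records:
        code = canonical_country(record.get("country"))
        if code is None:
            rejected.append(record)
        else:
            clean.append({**record, "country": code})  # copy; the source record is untouched
    return clean, rejected


if __name__ == "__main__":
    crm_rows = [{"user": "ann", "country": "USA"}]
    app_rows = [{"user": "bob", "country": "US"}, {"user": "cy", "country": "Untied States"}]
    clean, rejected = cleanse(crm_rows + app_rows)
    print(Counter(r["country"] for r in clean))  # Counter({'US': 2})
    print(rejected)  # [{'user': 'cy', 'country': 'Untied States'}]
```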

Tech stack selection

The mechanism that delivers data to the warehouse consists of many components. First, you need to integrate with various data sources, then convert the extracted data into a consistent, usable format, and finally load it into the warehouse. Each of these ETL steps is implemented as a separate stage of the data delivery pipeline, so you may need different tools and technologies for each stage rather than a single stack for the entire data processing solution.
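The separation of steps can be sketched as three independent Python functions, assuming CSV text as the source and an in-memory SQLite database as the warehouse. The function names, the `orders` table, and its columns are illustrative assumptions; the point is that each stage consumes only the previous stage’s output, so one stage can be replaced by a different tool without rewriting the others.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterable, Iterator


def extract(csv_text: str) -> Iterator[Dict[str, str]]:
    """Extract: read raw rows from a source (CSV text standing in for an export)."""
    yield from csv.DictReader(io.StringIO(csv_text))


def transform(rows: Iterable[Dict[str, str]]) -> Iterator[tuple]:
    """Transform: cast types and tidy values into the warehouse's format."""
    for row in rows:
        yield (int(row["order_id"]), row["customer"].strip().title(), float(row["amount"]))


def load(conn: sqlite3.Connection, rows: Iterable[tuple]) -> int:
    """Load: write transformed rows to the target table and return its row count."""
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


def run_pipeline(csv_text: str, conn: sqlite3.Connection) -> int:
    # Stages are chained only through their inputs and outputs.
    return load(conn, transform(extract(csv_text)))


if __name__ == "__main__":
    raw = "order_id,customer,amount\n1, alice ,19.90\n2,BOB,5\n"
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
    print(run_pipeline(raw, conn))  # 2
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```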

Scalability

Are you planning to expand your business? If so, get ready for data growth. Data that once arrived at one hundred rows per minute may grow to thousands of rows per second. That is why growth must be anticipated and supported by the relevant technologies; otherwise, ETL developers may have to rebuild the entire pipeline as the data grows.
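One common way to prepare code for growing volumes is to stream data in fixed-size batches instead of loading everything into memory at once. The sketch below assumes a SQLite `events` table; `batched` and `load_in_batches` are hypothetical helpers, and batching is only one of several scaling techniques.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(rows: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield lists of at most `size` items; memory use depends on `size`, not total volume."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def load_in_batches(conn: sqlite3.Connection, rows: Iterable[tuple], size: int = 1000) -> int:
    """Insert rows one batch at a time, committing after each batch."""
    loaded = 0
    for batch in batched(rows, size):
        conn.executemany("INSERT INTO events VALUES (?, ?)", batch)
        conn.commit()
        loaded += len(batch)
    return loaded


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER, payload TEXT)")
    # A generator stands in for a source too large to hold in memory at once.
    source = ((i, f"event-{i}") for i in range(2500))
    print(load_in_batches(conn, source, size=1000))  # 2500, written as 1000 + 1000 + 500
```

Because the batch size, not the input size, bounds memory, the same code keeps working as volume rises; throughput still depends on the underlying infrastructure.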

Resource consumption

To work with databases, you need to write structured query language (SQL) queries. If they are not designed properly, they can consume extra resources and reduce system performance. For example, suppose an entity has two independent many-to-many relationships. A single query that joins both relationships returns one row for every combination of related records, so the row count is the product of the two sides rather than their sum. In that case, it may be more efficient to select the data with two separate queries than with one query containing multiple joins, which avoids multiplying the number of rows selected from the database.
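The row multiplication can be reproduced with SQLite. In this sketch (table and function names are hypothetical), one product is linked to three categories and four suppliers; the link tables store names directly to keep the example short. Joining both relationships returns 3 × 4 = 12 rows, while two separate queries return 3 + 4 = 7.

```python
import sqlite3
from typing import List, Tuple


def build_demo() -> sqlite3.Connection:
    """One product linked to 3 categories and 4 suppliers through two link tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE product_categories (product_id INTEGER, category TEXT);
        CREATE TABLE product_suppliers (product_id INTEGER, supplier TEXT);
        INSERT INTO products VALUES (1, 'Laptop');
        INSERT INTO product_categories VALUES (1, 'computers'), (1, 'electronics'), (1, 'office');
        INSERT INTO product_suppliers VALUES (1, 'A'), (1, 'B'), (1, 'C'), (1, 'D');
        """
    )
    return conn


def single_query(conn: sqlite3.Connection, product_id: int) -> List[tuple]:
    # Joining both relationships returns every category x supplier combination.
    return conn.execute(
        """
        SELECT p.name, c.category, s.supplier
        FROM products AS p
        JOIN product_categories AS c ON c.product_id = p.id
        JOIN product_suppliers AS s ON s.product_id = p.id
        WHERE p.id = ?
        """,
        (product_id,),
    ).fetchall()


def two_queries(conn: sqlite3.Connection, product_id: int) -> Tuple[List[str], List[str]]:
    # Each relationship is fetched on its own, so row counts add instead of multiply.
    categories = [row[0] for row in conn.execute(
        "SELECT category FROM product_categories WHERE product_id = ? ORDER BY category",
        (product_id,),
    )]
    suppliers = [row[0] for row in conn.execute(
        "SELECT supplier FROM product_suppliers WHERE product_id = ? ORDER BY supplier",
        (product_id,),
    )]
    return categories, suppliers


if __name__ == "__main__":
    conn = build_demo()
    print(len(single_query(conn, 1)))        # 12 rows (3 x 4)
    categories, suppliers = two_queries(conn, 1)
    print(len(categories) + len(suppliers))  # 7 rows (3 + 4)
```

The gap widens quickly: with 20 categories and 50 suppliers, the joined query returns 1,000 rows for a single product, against 70 for the two separate queries.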

ETL best practices

There is no single prescribed algorithm for implementing ETL. However, there are best practices that make the ETL process smooth and effective. Here is what you can do to improve ETL development in your organization:

Reduce data input. Don’t overload your data warehouse with unnecessary entries. The less data you extract, the faster and easier it is to process. So don’t import redundant records just to discard them later.

Apply incremental updates. To make ETL integration even faster, process only newly received data rather than reloading the whole data set. This technique takes more effort to implement, but it usually pays off with faster, lighter runs.

Enhance data quality. Make sure high-quality data flows through the entire ETL cycle. To do this, use data validation and cleansing tools to check and refine the data at input and during further processing.

Implement concurrency. You can save a lot of time by running several ETL integrations at the same time. With automated ETL solutions, you can implement parallel processing to the extent that your infrastructure capabilities allow.

Minimize databases. ETL engineers can significantly speed up the ETL process by limiting the size of databases and tables. If you need to increase ETL performance, consider splitting large tables into smaller ones, for example by partitioning them by date.

Use caching. By keeping copies of frequently used data, you can access it quickly and reduce ETL integration time.

Track results. You need to track ETL results to continually improve the ETL process and data handling. Therefore, set ETL metrics and indicators that you can compare over different periods of time.
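Two of the practices above, reducing data input and applying incremental updates, can be sketched together: the extract step selects only the columns the warehouse needs and only the rows newer than a stored watermark. This assumes a source table with a monotonically increasing `id`; the `orders` table and `extract_incremental` are hypothetical. An `id` watermark catches only new rows, so pipelines that must also capture updates typically track a last-modified timestamp instead.

```python
import sqlite3
from typing import List, Tuple


def extract_incremental(source: sqlite3.Connection, last_seen_id: int) -> Tuple[List[tuple], int]:
    """Fetch only rows newer than the stored watermark, and only the needed columns."""
    rows = source.execute(
        # Explicit columns skip fields the warehouse never uses (internal_notes).
        "SELECT id, customer, amount FROM orders WHERE id > ? ORDER BY id",
        (last_seen_id,),
    ).fetchall()
    # Advance the watermark to the newest id seen; keep the old one if nothing arrived.
    new_watermark = rows[-1][0] if rows else last_seen_id
    return rows, new_watermark


if __name__ == "__main__":
    source = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL, internal_notes TEXT)"
    )
    source.executemany(
        "INSERT INTO orders VALUES (?, ?, ?, ?)",
        [(1, "ann", 10.0, "x"), (2, "bob", 5.0, "y")],
    )
    watermark = 0  # a real pipeline would persist this between runs
    batch, watermark = extract_incremental(source, watermark)
    print(len(batch), watermark)  # 2 2

    source.execute("INSERT INTO orders VALUES (3, 'cy', 7.5, 'z')")
    batch, watermark = extract_incremental(source, watermark)
    print(batch, watermark)  # [(3, 'cy', 7.5)] 3
```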

Top 5 alternatives to ETL frameworks

ETL frameworks are foundations for developing ETL software in a specific programming language. They are reusable collections of packages and modules that standardize the development process by providing common functionality and an organized approach. Developers use ETL frameworks to implement ETL functionality in their software projects. However, some companies may not want to hire ETL developers and build the ETL pipeline themselves. In this case, they can opt for alternative tools to run their data processing. Here are the main tools to pay attention to:

Panoply

Panoply is an automated AI-based tool that synchronizes data from various sources and stores it in easy-to-access tables. Panoply does not require technical skills, so non-technical staff can use it without special training.

Despite these advantages, Panoply’s capabilities are limited when it comes to SSH tunneling or more complex database structures. Large IT businesses with their own database structures may therefore be better served by other solutions.

Matillion ETL

Matillion ETL is a cloud-native tool providing an easy way to extract, migrate, and transform your data in the cloud. Matillion effectively processes the data and prepares it for further usage by major analytics instruments like Looker and Tableau. Additionally, its user-friendly interface helps manage data flows without SQL coding.

Some drawbacks of Matillion ETL are poor scheduling capabilities and slow responses from the support team. Besides, it lacks looping constructs and cannot handle API responses in comma-separated values (CSV) format using the native API client.

Skyvia

Skyvia is a web service for cloud data integration and backup. It offers ETL tools for connecting cloud CRM with other data sources and enables complete control over business information flows. Skyvia uses SQL to view and manipulate data records and allows easy integration without programming skills.

The cons of Skyvia include slow synchronization, limited file management capabilities, and a lack of real-time support.

Alooma

Alooma is a cloud-based data integration platform designed to bring data from various sources into the company’s warehouse. It offers ample horizontal scalability and protects information by applying strong encryption to data in motion.

Users, however, note that Alooma has a rather complex interface and a limited feature set, and that it struggles to handle growing data pipelines. Besides, it does not work on desktop and mobile platforms and has no free version.

Xplenty

Xplenty is a data integration platform used to create custom data pipelines for better decision-making. Xplenty allows an effortless setup with no extensive coding. It can combine data from various sources and send them to a single destination for further management and control.

While Xplenty is suitable for small start-ups, it may not meet all the needs of large companies. Existing users say it lacks advanced features and customization options. Besides, Xplenty is quite expensive.

Where to hire ETL developers

In the modern world, organizations receive large amounts of information from various sources, but not all of it is valuable to the company. A properly designed approach to extracting, transforming, and loading data is the first step toward business growth. To succeed, you need an ETL pipeline that pulls data from heterogeneous sources into your system. Hiring in-house developers for this task does not make sense for many organizations: some view it as a one-off project, and others need an experienced team that can start immediately. Ready-made automated solutions may not work either, because they cannot meet the expectations of every business; they often lack vital features and carry expensive subscription fees.

In this regard, many businesses choose to outsource ETL development abroad. ETL outsourcing provides fast access to experienced programmers who have already implemented a number of similar projects. The client receives a smooth workflow with well-established implementation schemes and adopted development tools.

If you are thinking of hiring a remote team but do not know where to find one, consider outsourcing ETL development to Ukraine. The country has a vast talent pool and affordable prices. In addition, Ukrainian developers are recognized as highly qualified specialists, and their products are popular worldwide. You can book a consultation with a manager to clarify the details of this cooperation model and then decide whether it works for you.