Handling Latency and Performance Challenges in Real-Time Apps

Real-time applications deliver information to users with minimal delay, typically within milliseconds. These applications respond to user input immediately and can tolerate very little latency in data processing.

Real-time applications are used in various domains, such as communication tools, financial trading platforms, online gaming, live streaming, and collaborative editing software.

If you face performance challenges in your real-time app and are looking for ways to reduce latency, this post will help you get started.

Challenges of real-time apps

The primary objective of real-time applications is to offer an experience that is as close to real-time as possible. However, real-time applications face various challenges in terms of latency and performance.

Latency, the delay between an action and its corresponding response. It results from network congestion, server processing time, or inefficient data transmission protocols. Part of the delay is the time it takes for data to travel between the user's device and the application server. The rest is the processing time required to handle the user's request on the server side.

Synchronization problems when multiple users interact simultaneously. Imagine a scenario where multiple users are collaborating on a shared document or participating in a multiplayer online game. In such cases, synchronization is crucial to ensure that all interactions are accurately reflected and sequenced across all connected devices. Challenges may arise due to network delays, conflicting actions, or latency issues, potentially leading to disruptions in the user experience.

Scalability as the app's user base grows. This issue occurs when the app database struggles to handle increasing volumes of data or concurrent user requests. Another reason is inefficient resource utilization, where certain app components become overwhelmed as the user base grows. Moreover, poorly optimized code and hardware limitations can lead to longer processing times.

Strategies to minimize latency and improve performance in real-time apps

Low latency and high performance are essential for a great user experience. There are various strategies to minimize latency and enhance performance in real-time applications.

Employ predictive algorithms to pre-fetch data

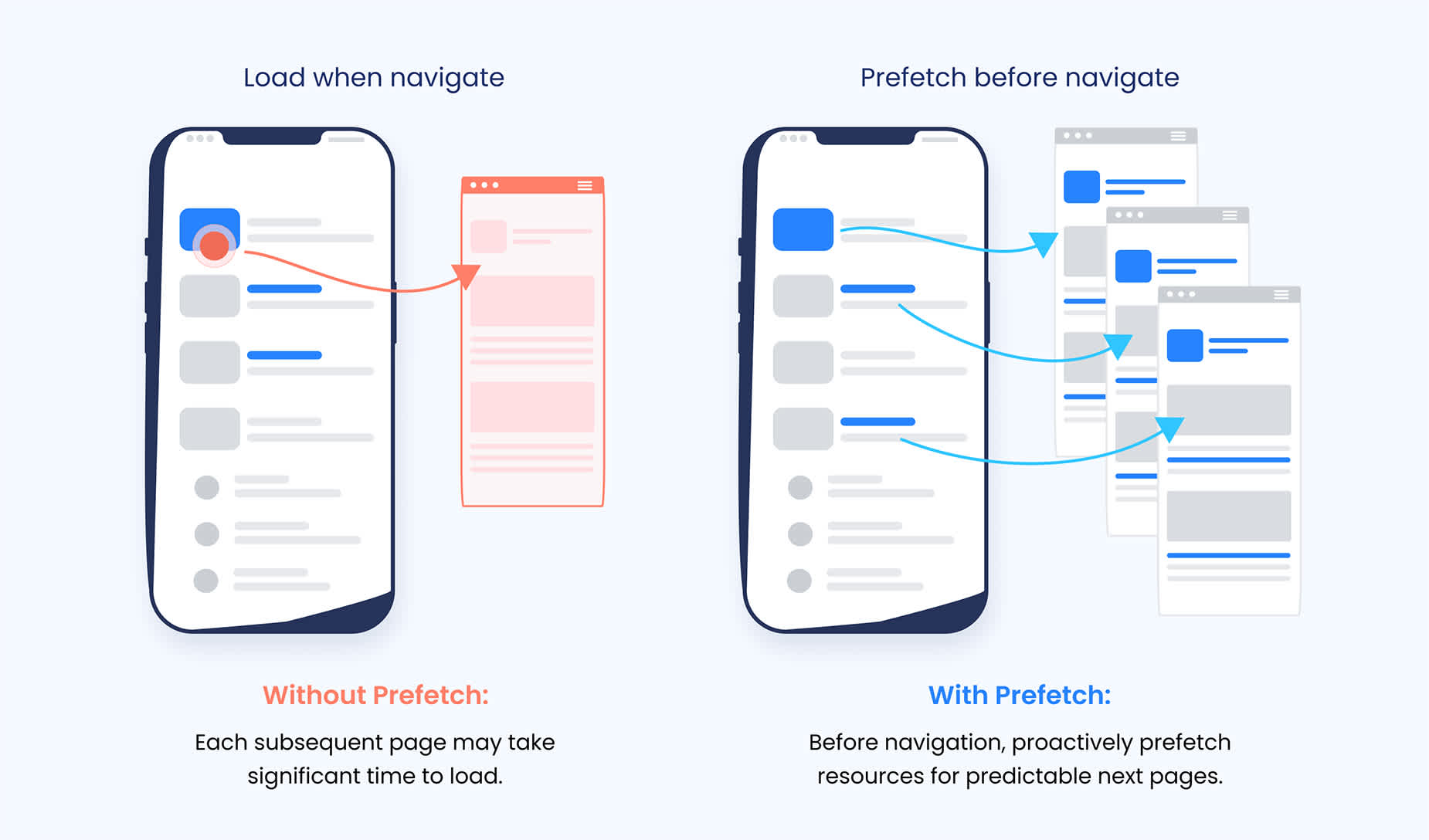

Pre-fetching means retrieving data before the user actually needs it. When the user then requests that data, it is delivered immediately, with no wait for a round trip to the server. This technique reduces the latency users perceive and streamlines the UX.

Predictive algorithms can analyze user behavior and historical data to anticipate future needs and fetch relevant information. Here's how it works:

Data analysis: collect and analyze user interactions and usage patterns to predict future actions.

Prediction models: develop predictive models that forecast data users will likely request.

Pre-fetching mechanism: use predictive models to retrieve and cache the anticipated data.

Background processing: execute pre-fetching in the background or during idle periods to minimize impact on user interactions.
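The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: it replaces a trained ML model with simple transition counts, and the names `NextItemPredictor`, `Prefetcher`, and the `fetch` callable are hypothetical. For brevity, pre-fetching runs inline here; a real app would hand it to a background task.

```python
from collections import Counter, defaultdict


class NextItemPredictor:
    """Counts which item tends to follow which (a stand-in for a real model)."""

    def __init__(self):
        self.transitions = defaultdict(Counter)

    def record(self, previous, current):
        # Data analysis: count each observed "previous -> current" step.
        self.transitions[previous][current] += 1

    def predict(self, current):
        # Prediction: the most frequent follower of `current`, or None.
        followers = self.transitions.get(current)
        if not followers:
            return None
        return followers.most_common(1)[0][0]


class Prefetcher:
    """Serves items and pre-fetches the predicted next one into a cache."""

    def __init__(self, predictor, fetch):
        self.predictor = predictor
        self.fetch = fetch  # hypothetical loader, e.g. a database or API call
        self.cache = {}

    def get(self, key):
        # Use the pre-fetched copy if the earlier prediction was right.
        if key in self.cache:
            value = self.cache.pop(key)
        else:
            value = self.fetch(key)
        # Pre-fetching mechanism: load the anticipated next item.
        # Assumption: a real app would schedule this in the background.
        upcoming = self.predictor.predict(key)
        if upcoming is not None and upcoming not in self.cache:
            self.cache[upcoming] = self.fetch(upcoming)
        return value
```

With a history in which "cart" is usually followed by "checkout", a call to `get("cart")` also loads "checkout", so the next request is served from the cache without another fetch.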

Tools to implement data pre-fetching

Machine learning libraries. TensorFlow, scikit-learn, and PyTorch provide powerful tools to build predictive models. These libraries offer various algorithms to train ML models to analyze user behavior and make predictions based on historical data.

Data analytics platforms. Google Analytics, Mixpanel, and Adobe Analytics provide insights into user engagement, navigation patterns, and feature usage. This helps build predictive models that pre-fetch data.

Caching solutions. In addition to caching on the client side, pre-fetching may trigger updates in a cache database (Redis, Memcached, etc.) that stores pre-fetched data in memory for quick retrieval by other users in the same scenario.

Real-case scenarios of using data pre-fetching

E-commerce platforms. Predictive algorithms can analyze past purchase history, browsing behavior, and product interactions. This allows you to anticipate the products a user is likely to view next and pre-fetch product images, descriptions, and other relevant information.

Enterprise applications. In business environments, predictive algorithms can anticipate the data and reports that employees frequently access. By pre-fetching relevant documents, charts, and analytics data, enterprise applications enhance productivity and streamline workflows for employees.

Warehouse management systems. Suppose a warehouse manager frequently checks the stock levels of certain products. The system can pre-fetch this information from the database and have it ready to display when the manager logs in.

Order tracking solutions. Customers often inquire about the status of their orders. A tracking software can pre-fetch information for pending orders and immediately show it when users access their order history.

Patient records. Medical specialists often need quick access to patient records during consultations. Data pre-fetching can anticipate the patient records that are likely to be accessed based on appointment schedules.

Use appropriate load-balancing techniques and tools

It is essential to choose the most appropriate load-balancing technique for each system or its components. The following methods help to minimize latency and improve performance in real-time applications.

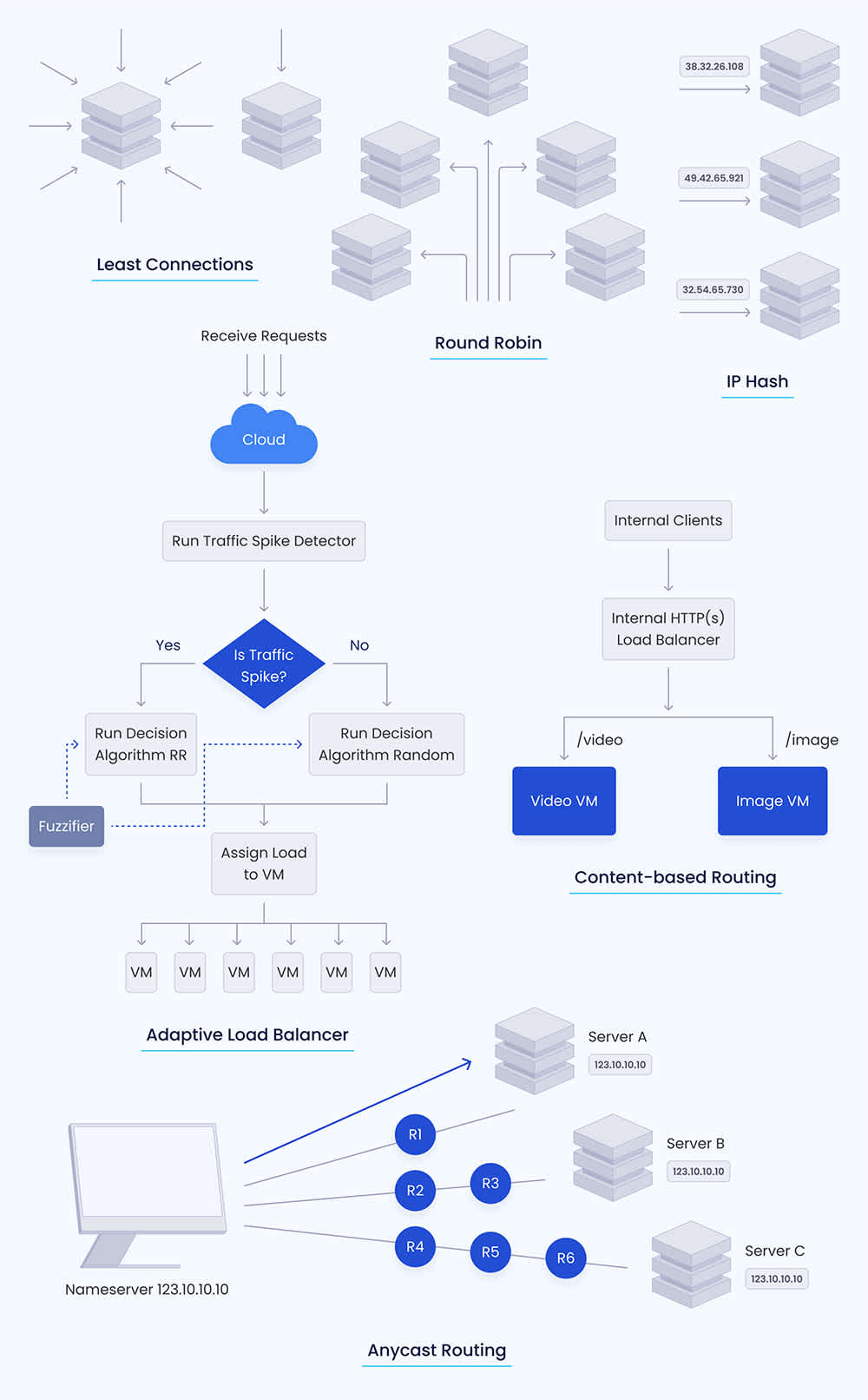

Least connections. This algorithm directs incoming requests to the server with the fewest active connections. It is well-suited for real-time apps as it helps distribute the load evenly across servers, minimizing response times and preventing overloading of any single server.

Round Robin. This distributes incoming requests equally among servers. While simple, the Round Robin technique is effective for real-time apps with predictable workloads. It ensures that each server receives an equal share of requests and reduces the risk of bottlenecks.

IP hash. The IP hash algorithm uses the client's IP address to determine which server to route the request to. As long as the server pool does not change, requests from the same client IP are routed to the same server. It is particularly useful for real-time applications that require session persistence and stateful connections.

Least response time. This routes requests to the server with the lowest response time, measured by the time taken to respond to previous requests. This approach is great for minimizing latency because it directs requests to the fastest-performing servers.

Adaptive load balancing. This makes routing decisions based on real-time performance metrics such as server load, response time, and resource utilization. Adaptive load balancing is great for maintaining stable performance in real-time apps with fluctuating workloads.

Content-based routing. Content-based routing is a technique that directs incoming requests to specific servers based on the content of the request. This content can be URL paths, HTTP headers, or payload content. Routing requests this way improves performance because each request is handled by the servers best suited to process it.

Anycast routing. This technique directs requests to the nearest server based on network topology. This helps minimize latency by routing traffic to the server with the shortest network path. It's particularly useful for real-time applications that require global scalability and low-latency communication, such as content delivery networks (CDNs) or distributed messaging systems.
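To make the first three techniques concrete, here is a minimal Python sketch of Round Robin, least connections, and IP hash selection. It is an illustration under simplifying assumptions: the class and function names are hypothetical, there are no health checks or weights, and real load balancers such as those listed below implement these algorithms internally.

```python
import hashlib
import itertools


class RoundRobin:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Tracks active connections and picks the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when the connection closes.
        self.active[server] -= 1


def ip_hash(client_ip, servers):
    """Maps a client IP to a server; stable while `servers` is unchanged."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Note that with a plain modulo, adding or removing a server remaps most clients to different servers, which is why the session persistence of IP hash holds only for a stable pool.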

Tools to set up load balancing

You can use the following tools to set up load balancing:

Pure load balancing tools: NGINX, HAProxy, Apache HTTP Server, Envoy Proxy. These tools support various load balancing algorithms, including least connections, Round Robin, IP hash, least response time, and more. They are commonly deployed in on-premises or self-managed environments.

Cloud-based load balancers: AWS Elastic Load Balancing (ELB), Azure Load Balancer, and Google Cloud Load Balancing. These services are provided by specific cloud platforms. They help distribute incoming traffic across multiple instances within a given cloud environment.

Use specific caching methods

It is critical to improve speed by using certain caching methods or a mix of them.

In-memory caching. This stores frequently accessed data in the application's memory instead of retrieving it from the database. In-memory caching provides fast read access and reduces latency by avoiding costly I/O operations.

Distributed caching. This shares cached data across multiple servers in a distributed system so that every application instance reads the same values. For example, if you have a trading app and the stock price is updated on one server, the distributed cache makes the updated price available to all servers.

Query result caching. You can store the results of database queries that are frequently executed to improve performance. For instance, in a data visualization dashboard, a user may ask for specific information such as "Show me sales data for March." The system can cache the results of such a query so that it can quickly display the results when the user requests the same information again.

Content Delivery Networks (CDNs). CDNs store both static and dynamic content at specific edge servers located in different parts of the world. This enables users to access content from nearby servers instead of relying on the origin server. By using CDNs, you can reduce the distance of content travel and achieve lower latency and faster performance in real-time applications.
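As an illustration of in-memory query result caching, the sketch below keeps results in a dictionary and expires them after a time-to-live (TTL). The `TTLCache` class, the `clock` parameter, and the cache key format are assumptions made for this example; in practice the same idea is usually backed by a store such as Redis or Memcached.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._store = {}    # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: skip the expensive call
        value = compute()    # miss or expired: run the query
        self._store[key] = (now + self.ttl, value)
        return value
```

A dashboard could call `cache.get_or_compute("sales:march", run_query)`, where `run_query` is a hypothetical function that executes the database query. The TTL bounds how stale a result can be, so it should be short for fast-changing data such as stock prices.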

Implement containerization and horizontal and vertical scaling

Containerization and scaling allow the automatic management and addition of IT resources according to app demands. Here is how they contribute to handling latency and performance challenges in real-time apps.

Containers offer faster startup times compared to virtual machines, enabling quick application launch and scaling as per workload demands. This helps real-time apps keep responding promptly to user requests when load changes. Containers also isolate applications so that each instance operates in its own environment. Combined with resource limits, this reduces resource contention and latency.

The most popular containerization and container orchestration technologies are Docker and Kubernetes. However, you can consider using other technologies depending on your project needs.

Horizontal scaling distributes incoming requests across multiple instances. As the demand for real-time services increases, horizontal scaling adds more servers to the pool and ensures that each server handles a specific workload. This prevents individual instances from becoming overloaded and reduces response times. Moreover, horizontal scaling enhances app resilience by decreasing the impact of node failures on overall system performance.

You can adjust horizontal scaling in on-premise and cloud environments. To implement horizontal scaling on-premise, you need to deploy load balancers and ingress controllers to distribute traffic across servers and use automated tools to manage scaling dynamically. You should also invest in hardware that supports scalability, such as server clusters or virtualization tech.

To implement horizontal scaling in the cloud, you should use scaling technologies specific to a given cloud platform.
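Automated horizontal scaling usually comes down to a rule that turns a load metric into an instance count. The sketch below uses a proportional rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler: scale the current count by the ratio of the observed metric to the target and round up. The function name and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling: replicas grow with the metric/target ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the allowed range (hypothetical bounds).
    return max(min_replicas, min(max_replicas, raw))
```

For example, four instances running at 90% average CPU against a 60% target give `desired_replicas(4, 90, 60)`, which evaluates to 6.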

Vertical scaling upgrades the resources of existing servers to accommodate higher loads. By using this approach, you can increase CPU, memory, or storage capacity to meet the demands of your app. Vertical scaling can provide immediate performance improvements; however, it may eventually reach hardware limitations and become cost-prohibitive. For this reason, it is usually best to combine containerization with vertical and horizontal scaling to optimize performance in real-time apps.

If you want to set up vertical scaling in an in-house infrastructure, you should upgrade your servers or replace them with higher-specification machines. This means adding more powerful processors and increasing memory and storage. If you want to scale up in the cloud, you should opt for specific cloud services that will help you add capacity to your current IT resources.

Measuring latency in real-time apps

You should consider latency as a variable that may change under specific conditions. It is necessary to continuously monitor and measure latency, including from different geographical locations, and respond quickly to any issues. Various techniques and tools can be used to address latency problems effectively.

Application Performance Monitoring (APM) Software. New Relic, Datadog, Dynatrace, and other APM solutions monitor app performance and measure latency metrics. These tools track response times, database queries, and server-side processing, helping identify performance bottlenecks within an app.

Logging and metrics platforms. ELK Stack (Elasticsearch, Logstash, Kibana), Prometheus, or Grafana collect and visualize latency-related data. You can log timestamps for key events and monitor latency metrics over time. This will help you detect anomalies that may affect application performance.

Synthetic monitoring services. Pingdom, UptimeRobot, or StatusCake simulate user interactions and measure response times from different geographic locations. You can use these monitoring services to understand global latency patterns and optimize your app's performance for users worldwide.

Real user monitoring (RUM) tools. Google Analytics, Yottaa, or Raygun track latency experienced by users in real time. They can help you monitor user interactions, measure page load times, and identify performance issues specific to different devices, browsers, or geographic regions.
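Before adopting a full monitoring tool, you can log timestamps around a key operation and summarize them yourself. The following sketch is one simple way to do that with the Python standard library. The function names are hypothetical, and percentiles are used because averages hide the slow requests that users actually notice.

```python
import statistics
import time


def measure_latency_ms(operation, runs=100):
    """Times `operation` repeatedly and returns latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples_ms):
    """Returns median and tail latencies from a list of samples."""
    # n=100 yields 99 cut points; index 49 is p50, 94 is p95, 98 is p99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }
```

Calling `summarize(measure_latency_ms(handler))`, where `handler` is a hypothetical function wrapping the request you care about, gives a quick baseline to compare against after each optimization.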

Developing a high-performance app with Erbis

Quick response time is crucial for modern software systems. In real-time applications, even the slightest delay can significantly impact the user experience, making latency unacceptable. Fortunately, there are several technologies available that enable instant data transfer and processing of large amounts of information. By carefully planning and selecting suitable tech stacks, you can create a high-performance application with close to zero latency, regardless of the volume of data and traffic.

At Erbis, we have been developing competitive applications for 11 years, specializing in highly loaded systems, scalable projects, and large data volumes. Our team comprises senior experts in the field of cloud technologies, software engineering, and smart solutions. If you're looking for a reliable technology partner to optimize the performance of your real-time application or create one from scratch, please get in touch with us.